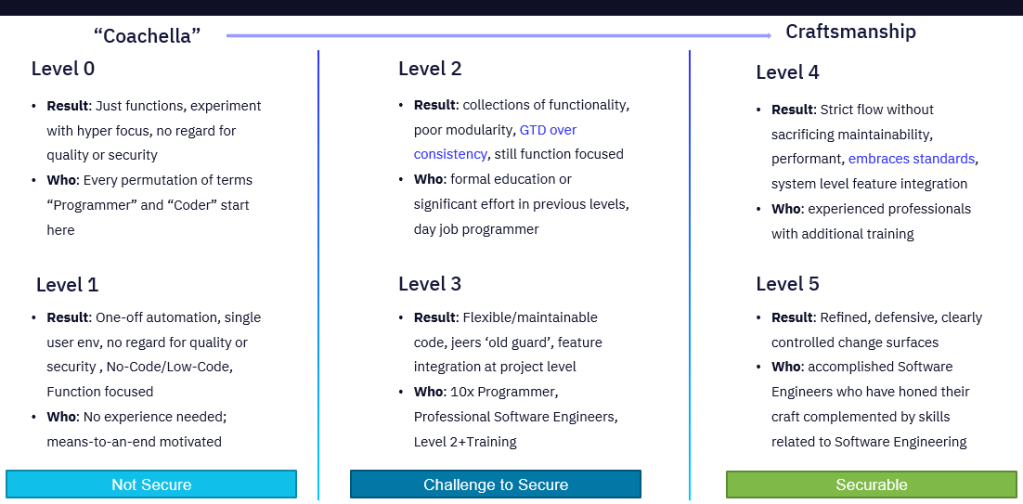

Aristotle’s account of a first principle (in one sense) as “the first basis from which a thing is known” (Met. 1013a14–15) – Wikipedia

Thinking about the first principle of software engineering as the essence of the practice helps us align our expectations and choose appropriate strategies. It also helps us think of Software Engineering as a discrete discipline that requires skill and experience. It is not a shallow practice of producing lines of code in a vacuum. It is the practice of producing well-thought-out code that completes a valuable idea for solving a problem. That value depends on the attributes that make the code fit to be part, or all, of a solution. Further, value in software is rarely fixed at a single point in time. Value multiplies over time. Short-sighted additions die on the vine and can take twice the work to revive later.

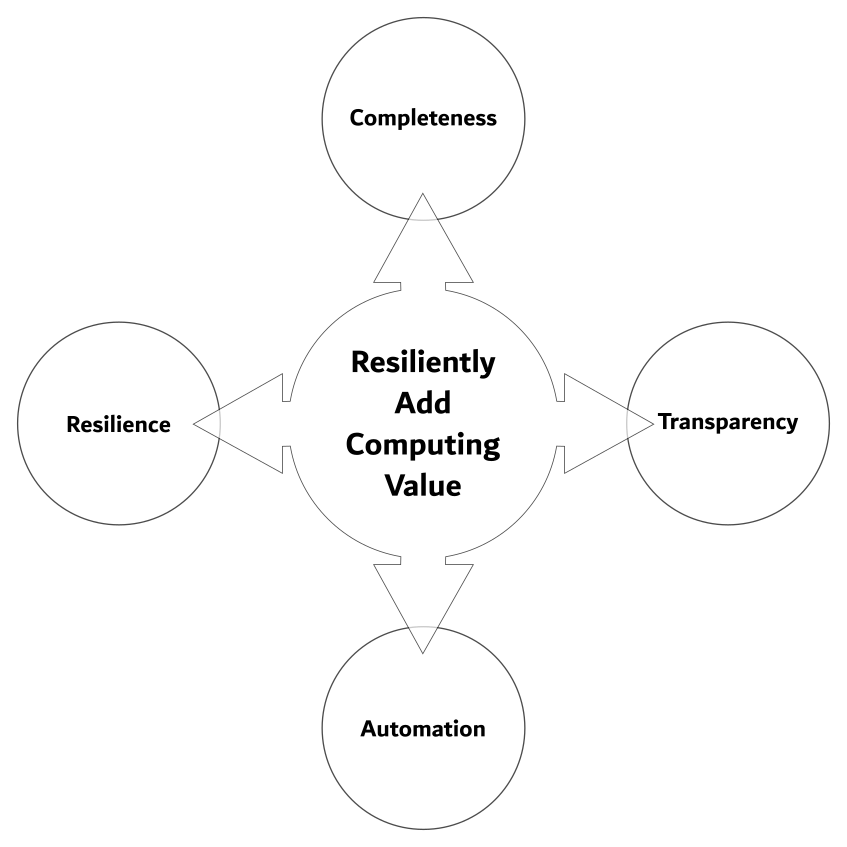

Therefore, the essence of Software Engineering is to:

Resiliently Add Computing Value

It is easy to see that disposable code does play a role in the IT landscape. However, it has less value to a Software Engineer. The first principle sets a goal of accumulating value in a solution or system through modification, preferably enhancement rather than repair. If you inherit a system that has more value in its idea than in its execution, however, you are forced to start at a disadvantage.

Baseline First Principle Strategies

After stripping it down to its essence, we can identify the most fundamental Software Engineering strategies. These are Resilience, Completeness, Transparency and Automation.

Let us expand on these concepts and explain how they may relate to security concerns.

Resilience

Resilience stands out as an obvious first choice, given that it appears in the statement of the first principle itself. Resilience describes an application’s ability to keep running predictably under unfavorable circumstances and load. It also describes how software should fail gracefully, without corrupting or exposing data or causing any other damage. Finally, it covers recovery from such failures.

You may recognize Resilience as a strategy for Availability. It is also how we deal with injection, timing attacks, enumeration and various other attacks that leverage glitching. We will cover various tactics to address Resilience, like Defensive Coding and Dependency Management.
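As a minimal sketch of what defensive coding can look like, the example below validates input, uses a parameterized query so that hostile input is treated as data, and fails gracefully instead of leaking an error. The `users` table, the `find_user` function and the 64-character limit are assumptions made for illustration; they do not come from any particular system.

```python
import sqlite3


def find_user(conn, username):
    """Return (id, name) for a user, or None on bad input or database failure."""
    # Reject input that cannot be a valid name before it reaches the database.
    if not isinstance(username, str) or not (0 < len(username) <= 64):
        return None
    try:
        # The "?" placeholder keeps the input as data, never as SQL text.
        cursor = conn.execute(
            "SELECT id, name FROM users WHERE name = ?", (username,)
        )
        return cursor.fetchone()
    except sqlite3.Error:
        # Fail gracefully: no stack trace or schema detail reaches the caller.
        return None


# Hypothetical in-memory database used only to exercise the function.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("INSERT INTO users (name) VALUES ('alice')")

print(find_user(conn, "alice"))
print(find_user(conn, "alice' OR '1'='1"))
```

The second call carries a classic injection string, yet it is simply compared against the `name` column and matches nothing. A real application would likely also log the failure rather than silently returning `None`.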

Completeness

Another baseline strategy supporting the first principle is Completeness. This describes addressing implicit requirements in a way that creates a cohesive solution. Each context brings with it certain attributes that must be addressed. Sometimes this involves complementary solutions, as in the case of Authorization and Authentication to support multi-user contexts. Further, authorization should completely cover sensitive data, leaving no “insecure direct object references”.

Completeness means we maintain confidentiality and integrity. We will cover aspects of Completeness with the sections on Auth, Square, Requirements Mastering and Testing.
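To make the point about insecure direct object references concrete, here is a small sketch in which every lookup by identifier also checks ownership. The `Document` type, the `DOCUMENTS` store and the `get_document` function are hypothetical names chosen for the example, and the ownership rule is the simplest one possible.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: int
    owner: str
    body: str


class NotFound(Exception):
    """Raised for both missing and forbidden documents."""


# Hypothetical in-memory store standing in for a real database.
DOCUMENTS = {
    1: Document(1, "alice", "alice's notes"),
    2: Document(2, "bob", "bob's notes"),
}


def get_document(current_user, doc_id):
    """Return the document only if current_user owns it."""
    doc = DOCUMENTS.get(doc_id)
    # Knowing a valid id is not enough: the caller must also own the record.
    # Missing and forbidden look identical, so ids cannot be enumerated.
    if doc is None or doc.owner != current_user:
        raise NotFound(doc_id)
    return doc


print(get_document("alice", 1).body)
```

Authentication establishes who `current_user` is; the check inside `get_document` is the authorization half. Leaving either half out is the kind of gap Completeness is meant to close.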

Transparency

Transparency has become particularly important in development processes, and it is just as valuable at the code level. There are certain situations that require being specific and explicit. Rarely, if ever, is there value in obscurity, especially when speaking of security. Further, a transparent system is a supportable system.

Transparency describes the need to enforce non-repudiation. It can be used to guide what we need to log. Transparency helps us measure how resilient the system is.
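One way to let Transparency guide logging is to record who did what, to which resource, and with what outcome, in a structured form that can be searched and counted later. The sketch below assumes a logger named `audit` and a hypothetical `audit_event` helper with illustrative field names. A log like this is only a starting point; full non-repudiation would also need tamper-evident storage, which is not shown here.

```python
import json
import logging
from datetime import datetime, timezone

# Assumed logger name; a real system would route this to protected storage.
audit_log = logging.getLogger("audit")


def audit_event(actor, action, target, outcome):
    """Write one structured audit record and return the serialized line."""
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "actor": actor,      # who performed the action
        "action": action,    # what was attempted
        "target": target,    # which resource was affected
        "outcome": outcome,  # e.g. "allowed", "denied", "error"
    }
    line = json.dumps(record, sort_keys=True)
    audit_log.info(line)
    return line


logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_event("alice", "read", "document:1", "allowed")
audit_event("alice", "read", "document:2", "denied")
```

Because each record carries an explicit outcome, counting the `denied` and `error` entries over time gives a rough measure of how the system behaves under unfavorable conditions.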

Automation

In a sense, automation is the most basic of computing principles. It is why computing is valuable. It is also important to modern process management. Automation explains the need to document and version control processes. For many, automation enables the “always be practicing” philosophy that is a precursor to Continuous Integration and Continuous Deployment. Further, automation limits a process’s exposure to failures caused by differences between executions.

Ultimately, Automation is a first-level strategy shared directly with security. The ability to repeat a process with highly controlled change between executions is nearly priceless if the process is transparent enough that failures can be accounted for in subsequent executions. This allows an iterative approach to complete coverage of the problem domain. Resilience can also be enhanced as new and unforeseen circumstances emerge. This focuses effort on progress without wasting it on activities that would otherwise need repeating.

Conclusion

I encourage you to use the First Principle to develop an organizational mindset that embraces these strategies at their most fundamental level.

At a time when we are on the cusp of a code explosion, much like the explosion of networks that came with the popularity of cloud, it is important to establish this complete Software Engineering mindset. Once such an understanding is in place, we can build strategies for quality, secure solutions that distinguish us from the deluge of lowbrow software knocking at our door.